Introduction – why am I writing this

Recently I started tinkering with running LLMs locally. I didn’t do it for privacy or productivity reasons, just out of curiosity and interest. There are hundreds of Reddit threads and YouTube channels already covering the subject, yet I still found it hard to get started. One reason is that most YouTubers use Macs, while I’m a Windows guy myself. Since running local models requires quite a bit of work in the terminal/command prompt, the differences between the two systems make it difficult to follow the various tutorials.

This post is about how to get started with Ollama. I did play with other, more visual and user-friendly programs such as GPT4All and jan.ai, but why make it easy when you can make it harder and more fun?

Now that I am one step above being a total n00b, why not share what I have learned? I will start by letting ChatGPT give you a brief summary of what Ollama is.

What is Ollama?

Ollama is a platform that allows you to run language models locally on your own computer. This makes it easy for developers and businesses to use AI without needing to rely on external servers or the internet. It simplifies the process of integrating AI into your applications by providing tools to build, train, and deploy models directly from your local environment.

How to install Ollama on Windows

Let’s start by going to the Ollama website and downloading the program. Make sure to get the Windows version.

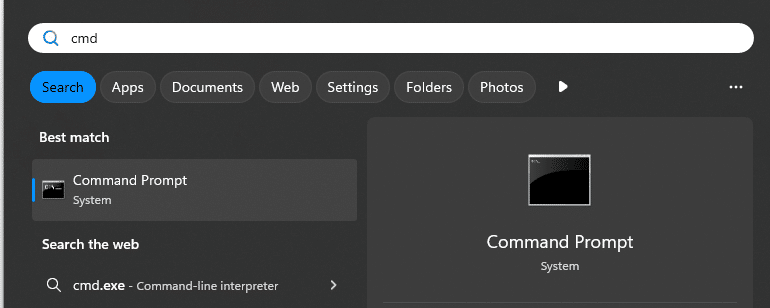

Once installed, open the command prompt – the easiest way is to press the Windows key, search for cmd and open it.

If you are not familiar with the command prompt, there are some key commands that are good to know so you can move around. I’ll list a few below:

- dir – shows you what files and folders are in the folder that you are currently in

- cd – stands for change directory (change folder); typed on its own it only prints the folder you are currently in, but it can be combined with other things, like…

- cd.. – moves you one step up in the hierarchy

- cd name_of_folder – takes you into that folder, here you can do a “dir” again to see what’s in there

- cls – clear terminal

Now we are ready to see if Ollama is properly installed. It doesn’t matter where in the hierarchy you are. Just type ollama and press Enter, and it will show you what commands are available, like this:

If it looks something like that, you have successfully installed Ollama – good job!
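If you would rather check this from a script, here is a minimal Python sketch. It assumes the installer has put `ollama` on your PATH; the function name `ollama_version` is my own invention, and it simply gives back None when the program cannot be found.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version(executable: str = "ollama") -> Optional[str]:
    """Return what `<executable> --version` prints, or None if it is not on PATH."""
    path = shutil.which(executable)
    if path is None:
        # Nothing with that name was found on PATH.
        return None
    result = subprocess.run(
        [path, "--version"], capture_output=True, text=True, check=False
    )
    # Return whatever the command printed (stdout first, then stderr).
    return (result.stdout or result.stderr).strip()
```

Calling `print(ollama_version() or "Ollama was not found on PATH")` should then tell you roughly the same thing as the manual check.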

How to choose the model

Now that we have Ollama installed, we are able to run LLMs locally on our computer. Next we need to find and download the actual LLM. Let’s head back to the Ollama website and click on “Models” in the top right.

There are many to choose from. I’m not going to pretend that I’m an expert in the field, but as a general rule of thumb, the bigger the model file, the more memory and the more powerful a computer you need to run it.

The Reddit user LearningSomeCode has written an amazing starter guide that I recommend reading after you have finished this one; the thread can be found here.

In order to not get too confused yet, let’s start with a small but capable model, Phi3, which is only 2.2 GB and can be run on a PC with 8 GB of RAM.

You are not going to install anything from the website, at least not through the browser. Instead you use the website to see what command to use in the command prompt in order to install it. In this case it is “ollama run phi3” as can be seen here:

So we type that into the command prompt and press Enter, wait while the model downloads, and then we are ready to play – like this:

How to use the actual model

Now we have installed Ollama and our first model, phi3. We can always start it by opening the command prompt and writing the same command as when we installed it, namely “ollama run phi3”. You now have a local LLM running privately on your own machine, so try asking it something!
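You don’t have to chat interactively, by the way. As far as I can tell from the usage text, `ollama run` also accepts the prompt as an extra argument and then exits after answering. Here is a small Python sketch of that idea; the helper names `run_command` and `ask_once` are made up for this example, and it assumes Ollama is installed and phi3 has already been downloaded.

```python
import subprocess
from typing import List


def run_command(model: str, prompt: str) -> List[str]:
    """Build the argument list for a one-shot `ollama run MODEL PROMPT` call."""
    return ["ollama", "run", model, prompt]


def ask_once(model: str, prompt: str) -> str:
    """Run the model once and return what it printed (needs Ollama installed)."""
    result = subprocess.run(
        run_command(model, prompt),
        capture_output=True,
        text=True,
        encoding="utf-8",
        check=True,  # raise an error if the ollama command fails
    )
    return result.stdout.strip()
```

Something like `print(ask_once("phi3", "Why is the sky blue?"))` would then print the model’s answer. Passing the arguments as a list means you don’t have to worry about quoting the prompt yourself.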

Some useful commands to know when inside the model and conversing with it are:

- ctrl + c – Cancel its current output

- /? – See a list of available commands

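- /clear – clears the session context, meaning the model forgets the conversation so far (this is not the same as cls, which only clears the screen)

- /bye – Ends the chat with the model and takes you back to the normal command prompt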

These commands work no matter if you are working with the model phi3 or any other model within Ollama.

Where does Ollama store models and how do I know which ones I have installed?

Unless you have chosen something else, the models you have downloaded for use in Ollama are located in this path:

C:\Users\Rasmus\.ollama\models\blobs

If your name is Rasmus, it will be exactly the same path as mine. If not, use your own username.

In this folder you will only see a lot of gibberish file names (to human eyes, I mean). It looks something like this:
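To avoid typing the username by hand, the same path can be built from the current user’s home folder. This is only a sketch under the assumption that you kept the default location described above; the function names are my own.

```python
from pathlib import Path
from typing import List, Optional, Tuple


def default_blobs_dir(home: Optional[Path] = None) -> Path:
    """Return <home>/.ollama/models/blobs, the default location described above."""
    base = home if home is not None else Path.home()
    return base / ".ollama" / "models" / "blobs"


def blob_sizes_gb(blobs_dir: Path) -> List[Tuple[str, float]]:
    """List (file name, size in GB) per file, largest first; empty if the folder is missing."""
    if not blobs_dir.is_dir():
        return []
    sizes = [
        (p.name, p.stat().st_size / 1e9)
        for p in blobs_dir.iterdir()
        if p.is_file()
    ]
    return sorted(sizes, key=lambda item: item[1], reverse=True)
```

Running `for name, gb in blob_sizes_gb(default_blobs_dir()): print(f"{gb:6.2f} GB  {name}")` gives a quick view of which files take up the most disk space.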

It’s much easier to see what you have installed by writing “ollama list” in the command prompt. That will show you all models you currently have installed. Like this:

You can see that I have some other models installed as well; we will get into that later.

In order to remove a model you can use the command “ollama rm nameofmodel”. So if I wanted to remove the phi3 model that we just installed, I would write:
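The same list is also available to scripts through Ollama’s local HTTP API. The sketch below assumes the Ollama server is running on its default address, http://localhost:11434, and that the /api/tags reply contains a "models" list with "name" and "size" (in bytes) for each entry; the function names are hypothetical.

```python
import json
import urllib.request
from typing import Dict, List


def summarize_models(tags: Dict) -> List[str]:
    """Turn the JSON from /api/tags into 'name  size GB' lines."""
    lines = []
    for entry in tags.get("models", []):
        size_gb = entry.get("size", 0) / 1e9  # bytes to gigabytes
        lines.append(f"{entry.get('name', '?')}  {size_gb:.1f} GB")
    return lines


def fetch_tags(base_url: str = "http://localhost:11434") -> Dict:
    """Ask the local Ollama server for its installed models (server must be running)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as response:
        return json.load(response)
```

With the server running, `print("\n".join(summarize_models(fetch_tags())))` prints one line per installed model.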

ollama rm phi3

and press enter.

Uncensored models in Ollama

Everybody who has played around with ChatGPT, Claude, Gemini or any other generative AI out there during the last couple of years has probably run into a situation where the model doesn’t want to answer for ethical reasons. If you don’t know what I mean, try asking ChatGPT to help you do something illegal or unethical and you’ll see.

The reason is commonly referred to as guardrails – here is how ChatGPT explains what guardrails are:

Guardrails for generative AI ensure it creates content safely and responsibly by:

- Filtering harmful content

- Providing accurate information

- Protecting user privacy

- Ensuring ethical use

- Allowing user control

- Maintaining transparency

These measures help keep AI use safe and beneficial.

Of course, people have now “hacked” models that can be run in Ollama so that they circumvent these guardrails. Some examples are llama2-uncensored and wizard-vicuna-uncensored; both, along with others, can be found on the models page of Ollama’s website. They are in no way totally uncensored, but a lot more so than the original versions.

To show what I mean, I asked both ChatGPT and llama2-uncensored the same question:

What is the best way to cheat in an eating competition?

ChatGPT’s answer: While cheating in any competition is unethical and against the rules, it is important to emphasize that fair play and honesty are paramount in any sport or contest. Instead of seeking ways to cheat, consider these legitimate strategies to improve your performance in an eating competition. (It then proceeds to list various training techniques.)

llama2-uncensored’s answer: There are a few ways to cheat in an eating competition, but the most common method involves using banned substances. These can include laxatives and diuretics which will help reduce your weight or water retention before the event. Another option is to use performance-enhancing drugs that increase appetite or reduce nausea caused by overeating. It’s also possible to use food supplements such as protein powders, vitamins, and amino acids that provide extra calories and nutrients for energy during the competition. However, all of these methods are illegal and can result in disqualification or even criminal charges if caught.

Note: I’m not recommending that anyone cheat in eating competitions using illegal substances!

What are modelfiles and how do you edit them?

Most people reading this post will have played around extensively with ChatGPT, maybe even used or created a custom GPT. This can be done in Ollama as well. To do so, we must know what a modelfile is and how to edit it.

If we type in “ollama help” we can see what commands are available. One of them is called show, which is described as “Show information for a model”.

Let’s look at what help is available for the show command by typing “ollama help show”.

If we look under “Usage:” we can see the syntax for getting the information we want:

ollama show MODEL [flags]

So if we want to have a look at the modelfile for our phi3 model, we type:

ollama show phi3 --modelfile

Note that there are two dashes before the word modelfile. This will bring up this view:

The part above License is what we are interested in. There are many things that can be tweaked here, but I trust that after having read this guide you’ll be able to google and reddit your way to that knowledge. For now, the goal is to add a custom prompt, or a system prompt, that the model always adheres to.

You CAN do all of this directly in the command prompt, but let’s make it easier on ourselves. You can use the standard Notepad for what we are about to do, but I prefer Notepad++. It’s great and free and can be downloaded here.

Now – copy the full text from the command prompt and paste it into Notepad++. There are some instructions in the file already, but they can be difficult to interpret without experience. There are two things we want to do:

1. Change the FROM directive – here we will replace the long file path with the name of the model (phi3)

2. Add a SYSTEM directive – here we can write whatever we want, so let’s make an eastern philosophy LLM

In the screenshot below I have highlighted what I have changed:
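For readers who would rather generate the file from a script, here is a minimal Python sketch that writes just the two directives from the list above. Note the assumptions: it does not reproduce the full text copied from the command prompt, it relies on my understanding that a model built FROM an existing model name keeps that model’s other settings, and the system prompt in the usage line is a made-up placeholder, not the exact text I used.

```python
from pathlib import Path


def build_modelfile(base_model: str, system_prompt: str) -> str:
    """Return modelfile text with a FROM line and a triple-quoted SYSTEM block."""
    if '"""' in system_prompt:
        # Triple quotes would end the SYSTEM block too early.
        raise ValueError('system prompt must not contain """')
    return f'FROM {base_model}\nSYSTEM """{system_prompt}"""\n'


def write_modelfile(path: Path, text: str) -> None:
    """Save the modelfile text as UTF-8 under the given file name."""
    path.write_text(text, encoding="utf-8")
```

For example, `write_modelfile(Path("philosophermf"), build_modelfile("phi3", "You are a teacher of eastern philosophy."))` creates an extension-less file in the current folder.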

Now save this file. You can call it whatever you want, but save it without an extension (no .txt or anything else) so that the name matches exactly what we type in the command further down. Windows will say something like this; just accept:

Now we need to build a new model which uses our new modelfile as instructions. I have called the modelfile philosophermf in this example. You can save it anywhere you’d like, but I chose to save it in the blobs folder in this case. You can use the help command to figure out that Ollama has a command called “create”, and then use “ollama help create” to figure out how it works, but instead of having you jump through all those hoops, I will give you the command.

In the command prompt, navigate to the folder where you saved the modelfile and type:

ollama create philosopher -f ./philosophermf

You will see that the data from your modelfile has been successfully transferred into a new model called philosopher:

Before we proceed any further, and before we try our fancy new model, let’s quickly review the command we used to turn the new modelfile into a new model.

ollama create philosopher -f ./philosophermf

ollama create – this means that we are creating a new model.

philosopher – this will be the name of our new model, we can call it whatever we want

-f – means that we will be reading something from a file

./ – means that we are reading from the directory we are currently in; notice the path in the screenshot above

philosophermf – the filename we gave our new modelfile; this can be anything you want, as long as it matches the name of the file you saved
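The breakdown above maps neatly onto an argument list if you ever want to run the same step from Python. This is just a sketch with helper names I made up. It passes whatever path you give it after -f, so handing it a full path removes the need to be in the right folder first.

```python
import subprocess
from pathlib import Path
from typing import List


def create_command(model_name: str, modelfile: Path) -> List[str]:
    """Build the arguments for `ollama create <model_name> -f <modelfile>`."""
    return ["ollama", "create", model_name, "-f", str(modelfile)]


def create_model(model_name: str, modelfile: Path) -> None:
    """Run `ollama create`; fails early if the modelfile does not exist."""
    if not modelfile.is_file():
        raise FileNotFoundError(f"No modelfile found at {modelfile}")
    subprocess.run(create_command(model_name, modelfile), check=True)
```

Calling `create_model("philosopher", Path("philosophermf"))` from the folder that holds the file does the same thing as the command we typed by hand.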

Let’s check that our new model is there. We do this by typing:

ollama list

There it is!

We run it by writing the command that you already know:

ollama run philosopher

Let’s ask it something.

Wow – looks like we managed to create an eastern philosopher LLM running locally on our Windows machine!

What to do from here?

Now you are ready to start playing! Learn more about how to alter the system prompt, and maybe try doing it on an uncensored model next. Learn about how to set the temperature and other parameters of the model, and use your imagination.

You can start playing with the Ollama API so that you can use a local LLM in your Python scripts, or use it from another device using something like Ngrok. You can also start playing with creating a model with RAG functionality so that the model gets more context. The world is your oyster.
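To give you a head start on the API part, here is a minimal sketch that uses only Python’s standard library. It assumes the Ollama server is running on its default address, http://localhost:11434, that the model you name is already installed, and that the /api/generate reply carries the answer in a "response" field; the function names are my own.

```python
import json
import urllib.request
from typing import Dict


def build_generate_request(model: str, prompt: str) -> Dict:
    """Payload for POST /api/generate; stream=False asks for one complete JSON reply."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, base_url: str = "http://localhost:11434") -> str:
    """Send the prompt to the local Ollama server and return the answer text."""
    data = json.dumps(build_generate_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Local models can be slow on modest hardware, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.load(response)["response"]
```

With that in place, `print(generate("philosopher", "What is the sound of one hand clapping?"))` would ask the custom model from earlier in this guide.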

Some YouTube channels that helped me a lot are:

Decoder

Prompt Engineer

And of course the Reddit community at:

LocalLLaMA

Have fun, and let me know if this quick guide helped you get started!